Slopwatch: linuxsecurity.com and hamradio.my (in Planet Ubuntu) Are at It Again With LLM Slop About "Linux"

"Slopwatch" is a never-ending and much-needed (due to growing urgency) series that aggregates new examples of LLM slop (i.e. paragraphs stuck together with other paragraphs whose words are strung together by a statistical model built by scanning "tokens", not learning anything at all about meanings and barely grasping grammar, either). We focus on that which impacts "Linux" or related topics because otherwise the scope of the job would be endless. We informally made it our 'job' to identify which sites became slopfarms and then watch them closely, giving them "another chance" (to correct their ways after 'experimenting' with LLMs, like LinuxInsider did).

Let's start with hamradio.my (aggregated in Planet Ubuntu) because some of its output seems legitimate, some is evidently a mixture, and some is very obviously LLM slop. Consider this latest one:

Even before feeding that into a scanner, it seemed rather obvious that this was LLM slop. And it was:

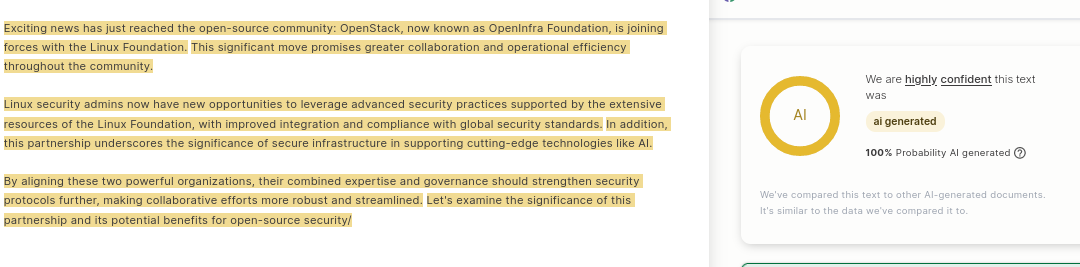

linuxsecurity.com has long been a slopfarm and its latest output seems like plagiarism of many pieces from or about the Linux Foundation.

Here is the machine-generated puff piece:

No room for doubt.

That's just a small sample of fake 'articles' about "Linux". Surely many more will come and we'll report on what we find.

LLM slop does not save time; it does not have intelligence either. It does not make anyone smarter and it mostly wastes people's time by distracting them from real articles, composed by people who actually comprehend what they write about (paragraphs that organise thoughts and articulate them clearly).

We should also revisit the difference between shortening and summarising, as many sites out there falsely claim that LLMs can save time not just for fake writers but also for real readers. Without actually understanding the message, a machine cannot summarise that message; it can just cull ("shorten") parts of it, so there's no real, viable use case for LLMs. Over 99% of the alleged "use cases" are lies or false marketing; the hype is effective at misleading shareholders though, using the veneer of "novelty" or "future promise". They don't tell those investors about the legal risks and bad reputation associated with plagiarism. █