Image Fusion is Not 'AI' (LLMs Aren't Either)

The term "hey hi" (AI) is being thrown around way too much these days. They say that businesses perishing (and laying off workers just to survive) is due to an "era of 'hey hi'" or a "'hey hi' revolution"; they say taxpayers must also bail out failing companies because of some nonsensical and fictional thing - a panic about a "hey hi" arms race, whatever that even means. It's mostly meaningless, of course; this narrative is made for bailouts or massive defence contracts, rescuing or shoring up failing companies. They start calling everything that accelerates some operations (e.g. GPUs) "hey hi", and even items like garments are sold or marketed as "hey hi". It has gotten outright ridiculous, and the media that helps with this hype is funded by these "hey hi" grifters. In other words, a lot of the media intentionally participates in lies. It is conning the readers/viewers.

There's something I've long been eager to say about so-called "hey hi" images. Like LLMs, they do not have intelligence. They just select items from labeled catalogues, add some stochastic element (so that it's not repeatable or deterministic and can create a different output each time), and then spew something out, mildly resembling what the prompter requested. So-called "hey hi" video would do the same on a frame-by-frame basis with adequate flow (cross-frame continuity). There's not much innovation there; it's just brute force. Voice synthesis and LLMs for generation of text are pre-existing and hardly novel.

It happens to be the case that my Ph.D. is connected to this, because we worked on image synthesis based on training data (we didn't call that "hey hi" more than 20 years ago) and I saw many of the same things they now call "hey hi" images even in 2003 (statistical models in generative mode). But what the current grifters do under the guise of "hey hi" is strip-mine collective arts or the Commons for fake 'originals'. The whole LLM nonsense does the same to code.
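To make "statistical models in generative mode" concrete, here is a deliberately tiny Python sketch. It is an illustration only, not the method from the Ph.D. work and not what any present-day image product does: the per-feature Gaussian model, the toy three-number "images", and the function names `fit` and `generate` are all assumptions made for this example. It shows the two ingredients described above: a model whose parameters come entirely from training data, and a stochastic element that makes each output differ.

```python
import random
import statistics


def fit(training):
    """Learn a per-feature mean and standard deviation from training vectors."""
    columns = list(zip(*training))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def generate(model, seed=None):
    """Run the model in generative mode: draw one new vector from it.

    With seed=None the output differs on every call (the stochastic element);
    a fixed seed makes the draw repeatable.
    """
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for mu, sigma in model]


# Hypothetical training data: three tiny "images" of three values each.
training = [
    [0.9, 2.1, 3.0],
    [1.1, 1.9, 3.2],
    [1.0, 2.0, 2.8],
]

model = fit(training)
sample_a = generate(model, seed=1)
sample_b = generate(model, seed=2)
print(sample_a)
print(sample_b)
```

Everything the sketch can output is a perturbation of statistics taken from the training set; change the seed and the result changes, but nothing appears that was not derived from the training data.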

Someone in IRC (active yesterday) posted a link to some article about "hey hi" images. It was about CG or autocomplete or state-of-the-art plagiarism (disguised or defended as "fair use"), not about "hey hi" at all. I said "hey hi" images was the wrong term as "it's just some CG" as "they isolate objects" ("some are labeled already") and "then do fusion of objects". I said "this is not ML/AI, it's BS [and] state-of-the-art plagiarism." The headlines about those things play along with the lies, "so the media misframes the issue," I said. And "how are we to expect real journalism when they cannot even properly explain what's done? Who funds the media?"

psydroid said "this was always going to happen, wasn't it? [...] with all the possibilities they were going to target the lowest common denominator and extract maximum monetary value out of it..."

Then "they pretend 'the machine did it!!'," I argued, "then their whistleblowers die" (the insiders who said this was plagiarism all along).

That's not to say the fusion is unimpressive. We just need to understand what those black boxes do. They basically take many images scraped from the Web and fuse them together with some digital 'duct tape'. Some of the results can be realistic-looking, but what's the use case? Scams? Fake news? Doctoring evidence? Also, what's the "business case"? Enabling scams and disinformation?

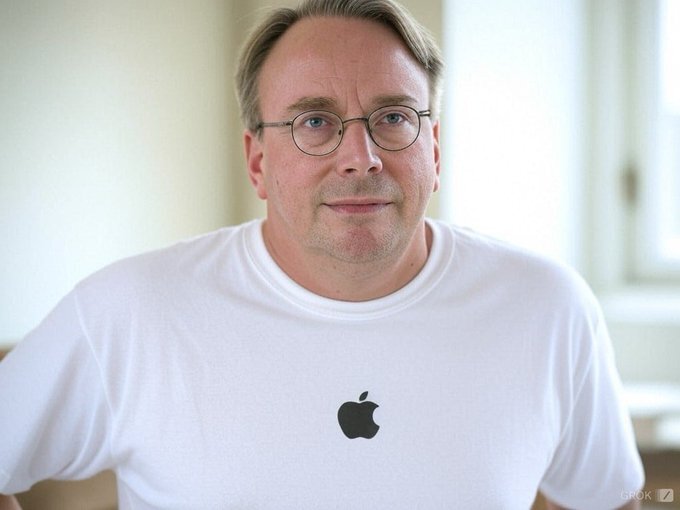

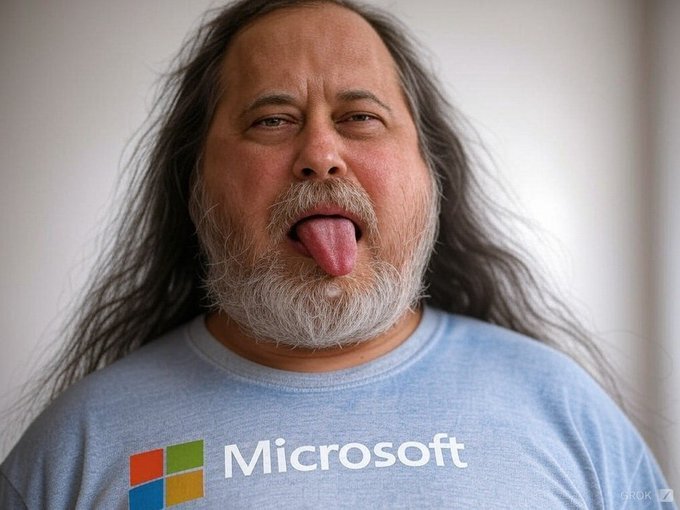

Amusing one another less than a week ago in Twitter ("X"), some people had made some fake images, including:

That last one can be used to connect RMS to actual pedophiles, even if the "photo" is fictional.

Does society need garbage like that? Such fakes can (and always could) be done by a digital artist; it's just a little more expensive and time-consuming. █