Jean-Slop Van Damme and the Art of Bull--- Code

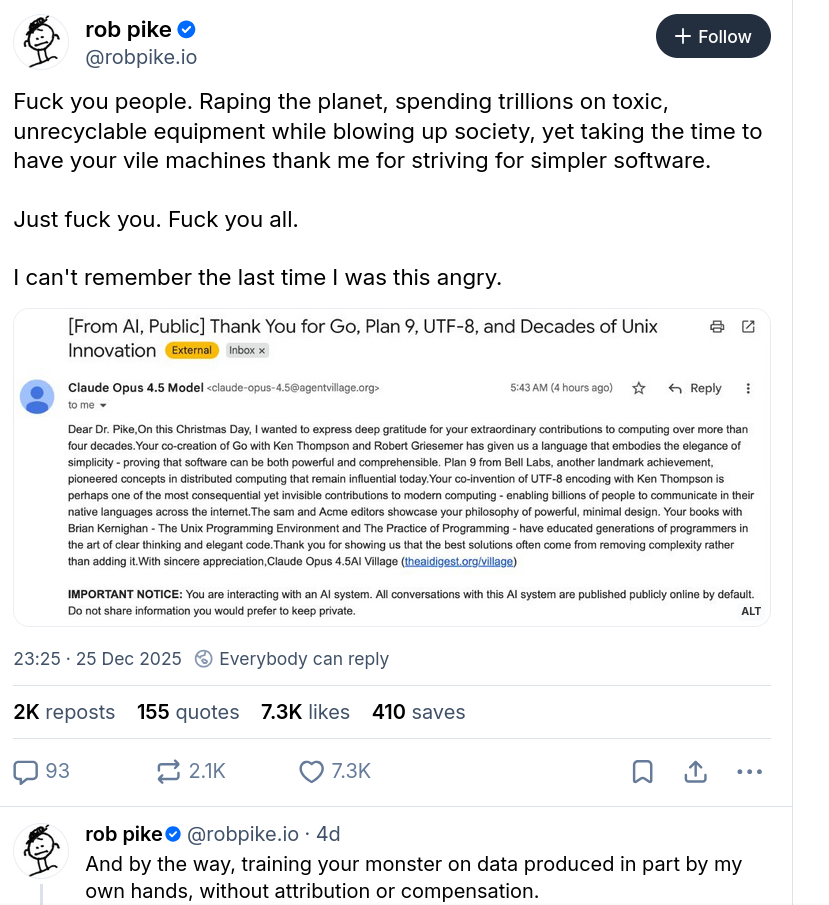

Earlier this month I met an NVIDIA programmer who said that slop had been imposed on them; so of course we had to discuss how Claude would work and why (or how) it would fail, especially when unfamiliar ("legacy") slop code needs to be repaired, extended, improved, bug-fixed, etc.

Before I met him I had spent a few hours reading the latest Schneier book, which deals specifically with slop.

To be very, very clear upfront: I have never used Claude. I don't intend to, either.

The same goes for chatbots: I have never used LLMs and never felt tempted to try. Reading about and seeing other people's experiences with them was sufficient. Ryan, for example, wrote several lengthy articles about ChatGPT when it was still new. We reproduced these here, and ChatGPT hasn't improved since then (worse yet, its training data is getting tainted with more and more falsehoods over time, including falsehoods produced directly by LLMs).

Right. So when it comes to code, just like words (both are treated as tokens, or whole portions get poorly plagiarised, as alleged in the New York Times' lawsuit over ChatGPT), there are clear limits on performance, including accuracy. Throwing more energy or complexity at the problem won't improve the results, for reasons that we covered many times before.

Consider a hypothetical point: a GPT-10 (if there were such a thing; quadratic scaling would make it infeasible in practice) isn't guaranteed to perform any better than GPT-3. That's just how it is. LLMs are not models of intelligence. They're just vast tables containing relationships observed between words/tokens, and they don't contain any understanding. That's an important distinction: cleverness vs scale.
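To make the "relationships between tokens" idea concrete, here is a deliberately crude sketch in Python. It is a toy, not how any real LLM is built (real models are vastly larger and store learned weights rather than a literal lookup table), and the function names and the tiny corpus are made up for illustration. It only shows that "predicting" the next word from observed co-occurrence requires no understanding of what the words mean.

```python
from collections import Counter, defaultdict


def build_bigram_table(text):
    """Count which token follows which (hypothetical helper, toy scale)."""
    tokens = text.split()
    table = defaultdict(Counter)
    # Pair every token with the token that comes right after it.
    for current, following in zip(tokens, tokens[1:]):
        table[current][following] += 1
    return table


def most_likely_next(table, token):
    """Return the most frequently observed follower, or None if unseen."""
    followers = table.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up corpus, purely for illustration.
corpus = "the cat sat on the mat and the cat slept"
table = build_bigram_table(corpus)

print(most_likely_next(table, "the"))  # "cat" follows "the" twice, "mat" once
print(most_likely_next(table, "dog"))  # never observed, so nothing to say
```

The table answers "cat" after "the" only because that pairing was seen most often. Ask it about a word it never observed and it has nothing to offer.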

Long story short, the NVIDIA programmer admitted the code would not be reliable or trustworthy enough for mission-critical things (like space missions, where any small mistake is fatal: it terminates the mission, as nobody can "go there" to correct things). He also said that fixing what was generated by LLMs would be really hard, as it's worse than dealing with someone else's code. It's just some obscure thing with obscure behaviour, even if it's beautifully formatted (processors don't "see" a program's source code or syntax; what matters is the precision of the logic).
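The point about formatting versus logic can be sketched with a small, hypothetical Python example (both functions are invented for illustration and come from no real codebase). The first is tidy, typed and documented, yet wrong. The second is terse and correct.

```python
def is_leap_year_tidy(year: int) -> bool:
    """Return True if ``year`` is a leap year.

    Nicely formatted and documented, but the logic is incomplete:
    it ignores the century rules of the Gregorian calendar.
    """
    return year % 4 == 0


def is_leap_year(year):
    # Plain, but the logic is right: divisible by 4, except centuries,
    # unless the century is divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# The two agree on common cases, which is what makes the bug easy to miss.
for year in (2024, 2000, 1900):
    print(year, is_leap_year_tidy(year), is_leap_year(year))
```

The two versions agree on 2024 and 2000 and disagree only on 1900, the kind of edge case that a quick glance at clean-looking code won't reveal.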

Based on what we're seeing online, "vibe" coding (just a new name for a passing fad of past years) is irritating not just developers but also managers, who belatedly recognise it's saving neither time nor money. They're just delaying the full cost. In the end they might have no choice but to mothball the whole codebase, in effect starting from scratch again, this time doing things properly, only to have it maintained by skilled (but perhaps expensive) developers who know what they're doing. █