Summary: Too many Web sites have turned themselves into computer programs (with a lot of proprietary and sometimes malicious JavaScript) rather than pages to be rendered.

Google Analytics is not new. I remember the day Google bought Urchin and turned it into a centralised, over-the-Web spying monster. I wrote quite a lot about it on USENET at the time, as did others. USENET is now known to many as "Google Groups" (after Google bought yet another company) and the decentralised nature of the original Web is largely gone by now. Platforms like Pleroma, Mastodon, Diaspora and so on have attempted to tackle this centralisation, but is it too late? Maybe.

Nowadays when we visit a Web site we rarely end up accessing just one single site (or domain). The result is a bloated mess, poorly justified by devices getting more powerful, browsers adding more features, and Webmasters forgetting to K.I.S.S.
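To make the "more than one site" point concrete, here is a rough sketch in Python that lists the other hosts a page's markup refers to. It is only an approximation under stated assumptions: it looks at static `script`, `img` and `iframe` tags, compares exact hostnames (so a subdomain counts as a separate host), and cannot see anything a script loads at run time. The names `ResourceHostCollector` and `third_party_hosts`, and the sample markup, are made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceHostCollector(HTMLParser):
    """Collect the hosts of resources referenced by a page's static markup."""

    # Assumption: only these tags are inspected; each loads its "src" URL.
    FETCHED_TAGS = {"script", "img", "iframe"}

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        if tag not in self.FETCHED_TAGS:
            return
        src = dict(attrs).get("src")
        if not src:
            return
        # Resolve relative and protocol-relative URLs against the page URL.
        host = urlparse(urljoin(self.page_url, src)).hostname
        if host:
            self.hosts.add(host)


def third_party_hosts(page_url, html):
    """Return the sorted hosts referenced by the page, excluding its own."""
    own_host = urlparse(page_url).hostname
    collector = ResourceHostCollector(page_url)
    collector.feed(html)
    return sorted(h for h in collector.hosts if h != own_host)


if __name__ == "__main__":
    sample = (
        '<script src="https://www.google-analytics.com/analytics.js"></script>'
        '<img src="/logo.png">'
        '<img src="//cdn.example.net/a.png">'
    )
    for host in third_party_hosts("http://example.org/post", sample):
        print(host)
```

For the sample markup the locally served `/logo.png` is ignored and two outside hosts are reported. A real audit would need to watch actual network requests in the browser, since proprietary scripts can pull in further hosts that never appear in the page source.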

Techrights has looked and behaved almost the same since 2006. We didn't get seduced into sending special fonts for visitors to download and render at their end. Sites aren't posters and it's the substance that counts, not looks.

I am sad to say that nowadays I dread, and thus hesitate to even enter (click a link to), certain GNU/Linux sites. Phoronix recently added spyware to all the pages (Michael told me that the parent company pressed for it), causing it to slow down and do all sorts of mysterious things to the browser of the visitors (it's proprietary code, thus impossible to tell what's going on). To Phoronix's credit, unlike some other GNU/Linux sites it did not go over the top with various other scripts (sometimes connecting the visitors to dozens of different sites/spies).

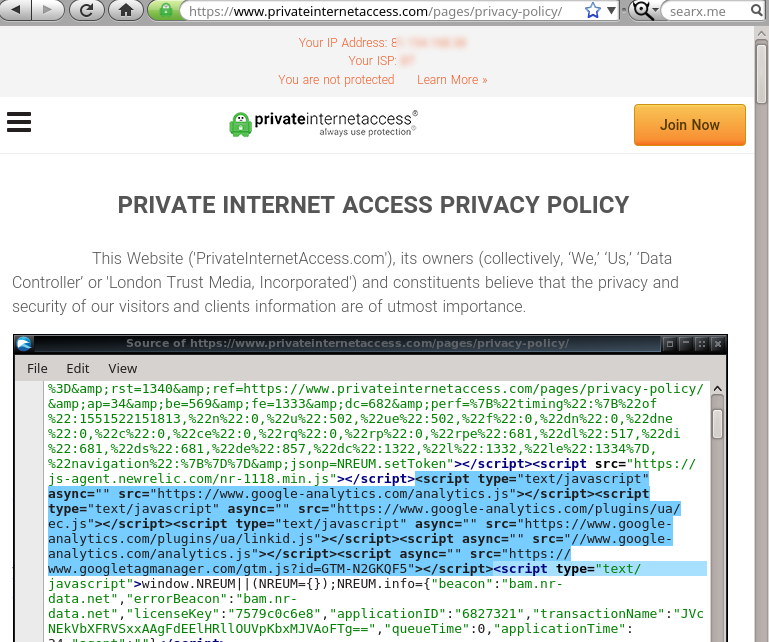

Disappointingly enough, Linux Journal keeps bragging about privacy (to help its parent company, a VPN company, make more sales or add subscribers) while both sites, Linux Journal and the parent company (PIA), put Google Analytics in all pages. How can they pretend to value privacy and preach to that effect when sending all the log data (and beyond) to Google?

Our main drawback here is that we don't support encryption in page-serving (that would slow things down), but that mostly means that one's ISP will be able to tell what pages, not just what site, get visited. Some time in the future we might adopt containers, and with that migration we might also add HTTPS. What is certain is that such adoption can limit reach/compatibility, raise requirements at the user's side, increase maintenance overhead, and even cause 'downtime' (expired certificates). In the interests of keeping the site light and easily accessible we will, for now, avoid JavaScript.
