The Great "AI" CON Explained by Dr. Andy Farnell

Dr. Andy Farnell has published this long article:

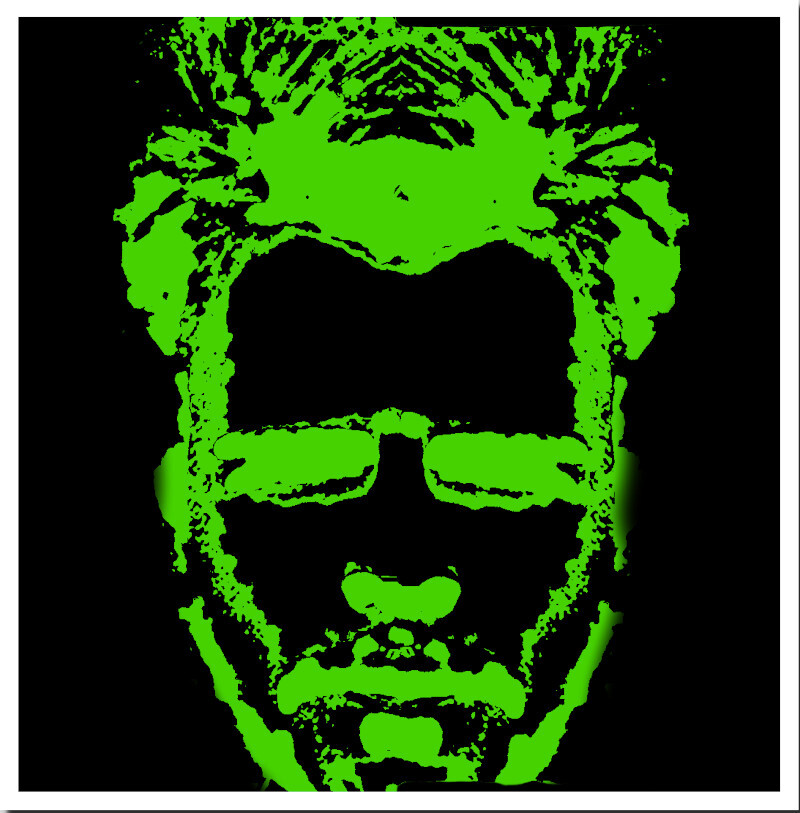

Davos 2026 was a pantomime, an "Oh yes it is! Oh no it isn't" difference of opinions on "AI". On one side there are folks who've invested maybe a hundred trillion in what's probably a lemon. On the other are scientists claiming an existential threat to life on Earth. Given such strong biases, a concise way to understand the great "AI" CON is that "we see what we want to see, we hear what we want to hear".

Already, perceptual psychologists, magicians and confidence tricksters will be sitting up and guessing where I'm going with this…

The emperor's new computer

"AI" in the guise of language models (LLMs) is a new type of computing… psychologically.

It is not technically different. It still relies on boring old transistors. Deterministic state transitions happen in matrix processors following the age-old laws of electronics, and getting hot for it. It rests on very traditional hardware. There's nothing magical, spooky, or "quantum" going on.

A deterministic computer is, at heart, a simple series of logic gates. Given a well-formed problem like adding two numbers, it completes in a finite time and always gives the same answer.

Slop in a nutshell:

This is not so much a GIGO (Garbage In, Garbage Out) problem as a Not Knowing What The Fuck You're Doing With A Computer Problem. And there's a lot of that going about at the moment.

On propaganda disguised and sold as "intelligence":

This is where we're heading with the project of language models as computing utilities. You provide an a priori set of biases, assumptions, cultural norms, prejudices, hidden desires and hopes to prime the machine. It mixes them with another set of biases, "guardrails" and political agendas, invisibly baked in by its owner/creator, and it spurts out what it "thinks you need to hear".

Specifically on LLMs:

That's not computing. That's not even close to computing. Impressive as language model inference may be, it's some strange instrument that functions on the border between propaganda, influence, advertising, perception management, reinforcement of orthodoxy and dogma, dressed up as an objective oracle. Deployed widely, it's a perfect psyops weapon. But only if people can be tricked into taking it seriously. Like the lie behind the lie detector, it's a great bit of pseudoscience that works so long as people believe in it.

Those who understand the mathematics of language models (Markov chains, attention-driven transformers, etc.) still struggle to explain "How can models 'answer' a question when they don't have any actual knowledge encoded?". The standard retort is that the knowledge is "implicit" in the training examples and so is "found" by statistical association. My way of expressing it, at the moment, is: LLMs don't answer questions.

They fulfil the expectations implied in your question.

I think this formulation, in terms of expectation, is more useful and powerful. LLMs perform a dance to try to entertain you and satisfy what it is they "think" you want to hear.
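The "statistical association" retort can be made concrete with the simplest model the article names, a word-level Markov chain. What follows is a minimal Python sketch under loud assumptions: the toy corpus and the function names `build_bigrams` and `continue_prompt` are hypothetical, and a real transformer is vastly larger and conditions on far more than the single previous word. It only shows the shape of the idea. Nothing in the table is a fact, just a record of which word followed which, so the "answer" is a plausible continuation of the prompt. And with a fixed seed it is as deterministic as any other program.

```python
import random
from collections import defaultdict


def build_bigrams(text: str) -> dict[str, list[str]]:
    """Map each word to every word that followed it in the training text.

    No facts are stored here, only observed co-occurrences.
    """
    words = text.split()
    table: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def continue_prompt(table: dict[str, list[str]], prompt: str,
                    length: int, seed: int) -> str:
    """Extend the prompt one word at a time.

    Each next word is sampled from the words that followed the previous
    word in training, so frequent successors are picked more often.
    """
    rng = random.Random(seed)  # seeded pseudo-random generator: same seed, same output
    words = prompt.split()
    for _ in range(length):
        options = table.get(words[-1])
        if not options:  # the last word was never followed by anything: stop
            break
        words.append(rng.choice(options))
    return " ".join(words)


# Hypothetical toy corpus, for illustration only.
corpus = "the cat sat on the mat . the dog sat on the rug . the cat ate the fish ."
table = build_bigrams(corpus)
print(continue_prompt(table, "the dog", length=6, seed=1))
```

Running it twice with the same seed prints the same sentence. Changing the prompt changes what comes out, because the model can only continue from whatever the prompt leads it to.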

On the idea that LLMs are, in effect, advertisers: "That's why, as Bruce Schneier agrees with us, the fact that OpenAI has started adding explicit advertising to ChatGPT is moot. Advertising is already implied in the very structure of 'AI'. With a slight adjustment to the interpretation of your question, ChatGPT is already waiting to offer all kinds of helpful product recommendations, or more subtly steer you away from ideas that are not in the interests of its masters." █

Image source: Rorschach Computing