Summary: Behind gates and bars governments and corporations put people, based on secret code or code nobody really understands (and which a few technology oligarchs like Bill Gates profit from)

THE TURING MACHINE is a very old concept and I'm lucky to have studied where it all started. Alan Turing was a master mathematician, whose state masters basically killed him. He's best remembered for Turing machines, not for helping to win the war against the Nazis. The Turing machine predates actual computers (in the sense that we call them that). It was studied extensively for many decades, even in recent decades (Marvin Minsky did extensive work on it). Researchers explored the level of complexity attained by various forms/variants of Turing machines with various parameters (degrees of expressiveness). The more features are added, the more complicated the systems become and the harder they are to understand (in the sense of grasping the underlying nature from a purely mathematical perspective, not by ad hoc criteria). As computer languages became more abstracted (or "high level"), programmers lost touch with what goes on at the lower (machine) level.
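To show how little machinery a basic Turing machine needs, here is a minimal sketch in Python. It is an illustration only: the rule-table layout, the `start`/`halt` state names and the bit-flipping example are my own assumptions, not any canonical formulation.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a single-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    # Read the visited cells back in order and trim surrounding blanks.
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical example machine: flip every bit, halt at the first blank.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_turing_machine(FLIP_RULES, "1011"))
```

The whole behaviour of this machine fits in three rules that anyone can read. Each extra feature (more tapes, more symbols, more states) makes such a table harder to reason about.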

With machine learning (nowadays easy to leverage owing to frameworks with trivial-to-use interfaces), the ways in which computer tasks are expressed further distance the human operator from the behaviour of the machine. Outputs and inputs are presumed valid and neutral, but there may be biases and subtleties, raising ethical concerns (for instance, reinforcing the biases of human-supplied training sets). It's quite a 'sausage factory', but it is marketed as "smart" or "big data" or "data cloud" (or "lake"). The buzzwords know no boundaries...
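To make the training-set point concrete, here is a deliberately tiny sketch in Python. Everything in it is an assumption for illustration: the `north`/`south` groups and the counts are invented, and the "model" is a plain majority vote per group rather than any real framework's algorithm.

```python
from collections import Counter, defaultdict


def train_majority_model(examples):
    """Learn, for each group, whichever decision humans made most often."""
    votes = defaultdict(Counter)
    for group, decision in examples:
        votes[group][decision] += 1
    return {group: counts.most_common(1)[0][0] for group, counts in votes.items()}


# Invented historical decisions; the skew between groups is the "bias".
HISTORY = (
    [("north", "approve")] * 8 + [("north", "deny")] * 2
    + [("south", "approve")] * 3 + [("south", "deny")] * 7
)

model = train_majority_model(HISTORY)
print(model)
```

Nothing in the code mentions prejudice. The model simply repeats the pattern in the human-supplied labels, so every future "south" case gets denied regardless of its merits.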

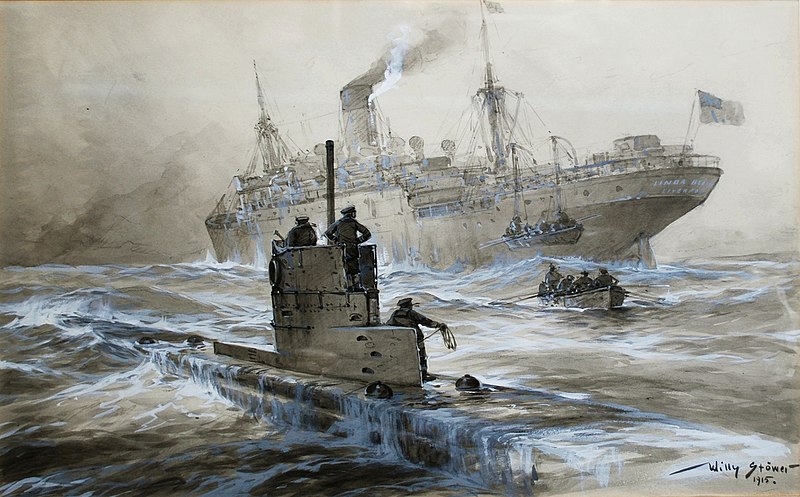

Let's use a practical example to further elucidate this. A long time ago someone could construct (even with pen and paper) a logical, deterministic state machine to compute someone's exam score, probably based on some human-supplied inputs such as pertinent marks. There was no effort made at syntactic analysis or natural language interpretation. When Turing worked on decrypting German codes he and his colleagues devised simple procedures, and machines to run them, to detect patterns. Eventually they managed to crack the cipher; they identified repetitive terms and sort of reverse-engineered the encoder to come up with a decoder (reversing the operation). Back then things were vastly simpler, often mechanical, so figuring out how to scramble and unscramble messages probably wasn't too hard provided one could lay hands on the communication equipment. Submarines (U-boats) had those... as command and control operations need such tools to privately (discreetly) coordinate actions at scale. Discrete maths for discreet communications?
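The exam-score machine mentioned above can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions (the function name `exam_score` and the per-question mark lists are hypothetical), not a reconstruction of any historical system.

```python
def exam_score(marks, max_marks):
    """Fold per-question marks into a percentage, one question at a time."""
    if len(marks) != len(max_marks):
        raise ValueError("marks and max_marks must have the same length")
    earned = 0      # running state: marks awarded so far
    available = 0   # running state: marks that could have been awarded
    for mark, maximum in zip(marks, max_marks):
        if not 0 <= mark <= maximum:
            raise ValueError("mark outside the permitted range")
        earned += mark
        available += maximum
    if available == 0:
        raise ValueError("no questions to score")
    return 100 * earned / available


print(exam_score([8, 16, 21], [10, 20, 30]))
```

The same inputs always give the same output, and every intermediate value can be checked by hand.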

As computers 'evolved' (scare quotes because progress nowadays does not beget improvement, except for the true 'masters' of those computers, not the users) we lost 'touch' or 'sense' of the code. Levels of abstraction made it almost infeasible to properly understand programs we write and/or use. "To paraphrase someone else," an associate noted a few hours ago, "newer is not better, different is not better, only better is better."

The GNU Project started when I was a year old. Back then, as we recalled in recent Techrights posts with old videos about UNIX, computer systems were simplified by breaking down computational tasks into atomic parts, where inputs and outputs could be 'piped' from one program to another. Each and every program could be studied in isolation, improving the overall understanding of what goes on (that helps debugging, as well). Prior to UNIX, core systems had generally become unmaintainable and too messy (hard-to-maintain blobs), according to the 'masterminds' of UNIX (is this still a permissible and appropriate term to use? Was UNIX their slave?). With things like IBM's systemd (developed on cryptic Microsoft servers with NSA access), we're moving in the opposite direction... G[I]AFAM is ENIGMA.
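The 'piping' idea can be imitated in Python, with generators standing in for separate programs. That substitution is my assumption for the sake of a self-contained sketch; real UNIX pipes connect independent processes. The names `read_lines`, `grep` and `count` are hypothetical stand-ins for tools like `cat`, `grep` and `wc -l`.

```python
def read_lines(text):
    """Stage 1: emit the input one line at a time."""
    yield from text.splitlines()


def grep(lines, needle):
    """Stage 2: pass through only the lines containing needle."""
    return (line for line in lines if needle in line)


def count(lines):
    """Stage 3: consume the stream and report how many lines arrived."""
    return sum(1 for _ in lines)


LOG = "boot ok\nerror: disk\nerror: net\nshutdown ok\n"

# Roughly the shape of: cat log | grep error | wc -l
print(count(grep(read_lines(LOG), "error")))
```

Each stage can be read, tested and replaced on its own.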

A U-boat in German is "U-Boot" (literally!) and there has just been a new release of a project with the same name (U-Boot v2020.10). Who or what is that an homage to? Many actual victims aboard passenger boats might find that vastly more offensive than "master"...

Yes, there's the pun with the word "boot" in it; but they took it further, as Wikipedia notes: "The current name Das U-Boot adds a German definite article, to create a bilingual pun on the classic 1981 German submarine film Das Boot, which takes place on a World War II German U-boat."

The project turns 21 next week.

Wanna know what's vastly more racist than the term "master" (on its own)? Proprietary software.

Proprietary software developers strive to hide their mischief, or sometimes racism, by obfuscating things. Hours ago someone sent us this new article entitled "Racist Algorithms: How Code Is Written Can Reinforce Systemic Racism" (it's from Teen Vogue).

"Of course," it notes, "individual human decisions are often biased at times too. But AI has the veneer of objectivity and the power to reify bias on a massive scale. Making matters worse, the public cannot understand many of these algorithms because the formulas are often proprietary business secrets."

"For someone like me," it continues, "who has spent hours programming and knows firsthand the deep harm that can arise from a single line of code, this secrecy is deeply worrisome. Without transparency, there is no way for anyone, from a criminal defendant to a college applicant, to understand how an algorithm arrived at a particular conclusion. It means that, in many ways, we are powerless, subordinated to the computer’s judgment."

Nowadays the Donald Trump regime uses computers to classify people, then arrests them, sometimes kills them, and sometimes 'only' kidnaps them using goons in unmarked vans. So those so-called 'Hey Hi!' algorithms can be a matter of life and death to many. Ask "Old Mister Watson" how IBM became so big so fast...

IBM has not improved since (example from 2018); only the marketing has improved. They blame not secrecy but mere words; they assure us that IBM fights against racism while doing business with some of the world's most oppressive regimes (and rigging bids to 'win').

To properly understand why proprietary code is so risky, consider what happens in turnkey tyrannies to people who are flagged as "bad" (rightly or wrongly): many get arrested, some get droned overseas (with no opportunity to appeal their computer-determined classification), and the companies responsible for these injustices -- sometimes murders -- talk to us about "corporate responsibility".

For computers to be trustworthy again two things need to happen: 1) computers need to become simpler (to study, modify, etc.) again; 2) the code needs to be, or become, Free software. Anything else would inevitably be a conduit for mistrial/injustice as soon as it's put in immoral hands with unethical objectives. The FSF recently warned about trials (or mistrials) by proprietary software. COVID-19 made that a lot more pressing an issue. We're told to trust private technology companies as intermediaries (whose business objectives may depend on the outcomes).

Computers that are 'code prisons' or black boxes would not harm only black people (putting them in small boxes or forcibly sterilising them as IBM would gladly do for profit). Maybe it's perfectly appropriate to increasingly (over time) associate proprietary software companies with prisons. Microsoft literally helps build prisons for babies, for being born of the 'wrong' race or nationality. How many people conveniently forgot the significant role GitHub (also a proprietary prison) plays in that...

But hey, this month GitHub drops the word "master"; it makes all the difference in the world, right? Dina Bass has just helped them with more of that tolerance posing in the same publication that helped distract from the ICE debacle using fake news about "Arctic vault".